Abstract

Indirect Time-of-Flight (iToF) imaging is widely used in practice owing to its advantages in cost and spatial resolution. However, its measurements often have a low signal-to-noise ratio (SNR), which leads to large depth errors, especially in scenes with strong ambient light or at long distances. In this paper, we propose a Fisher-information-guided framework that jointly optimizes the coding functions (light modulation and sensor demodulation functions) and the reconstruction network of iToF imaging, supervised by the proposed discriminative Fisher loss. The framework combines a differentiable model of the physical imaging process with a dual-branch depth reconstruction neural network. The imaging model accounts for various real-world factors and constraints, e.g., light falloff with distance and the physical implementability of the coding functions. As a result, the proposed method learns the optimal iToF imaging system in an end-to-end manner. The effectiveness of the proposed method is extensively verified with both simulations and prototype experiments.

Method

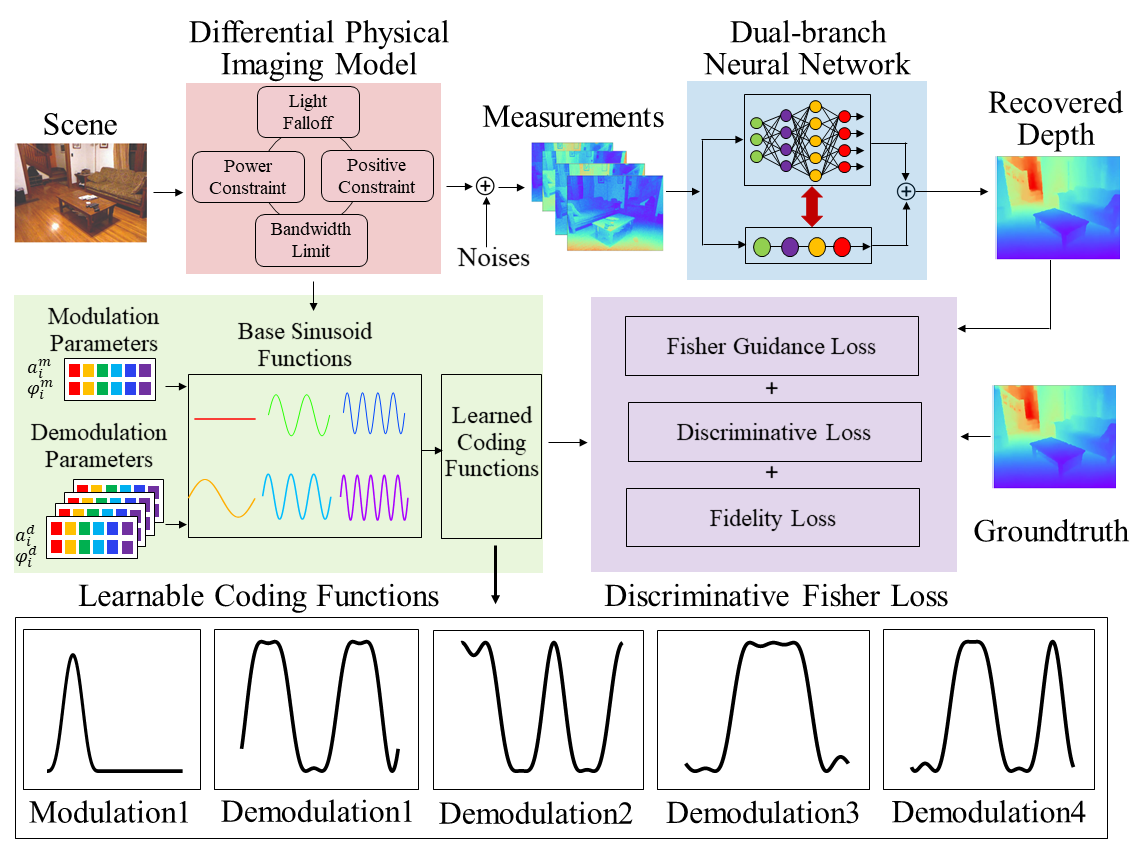

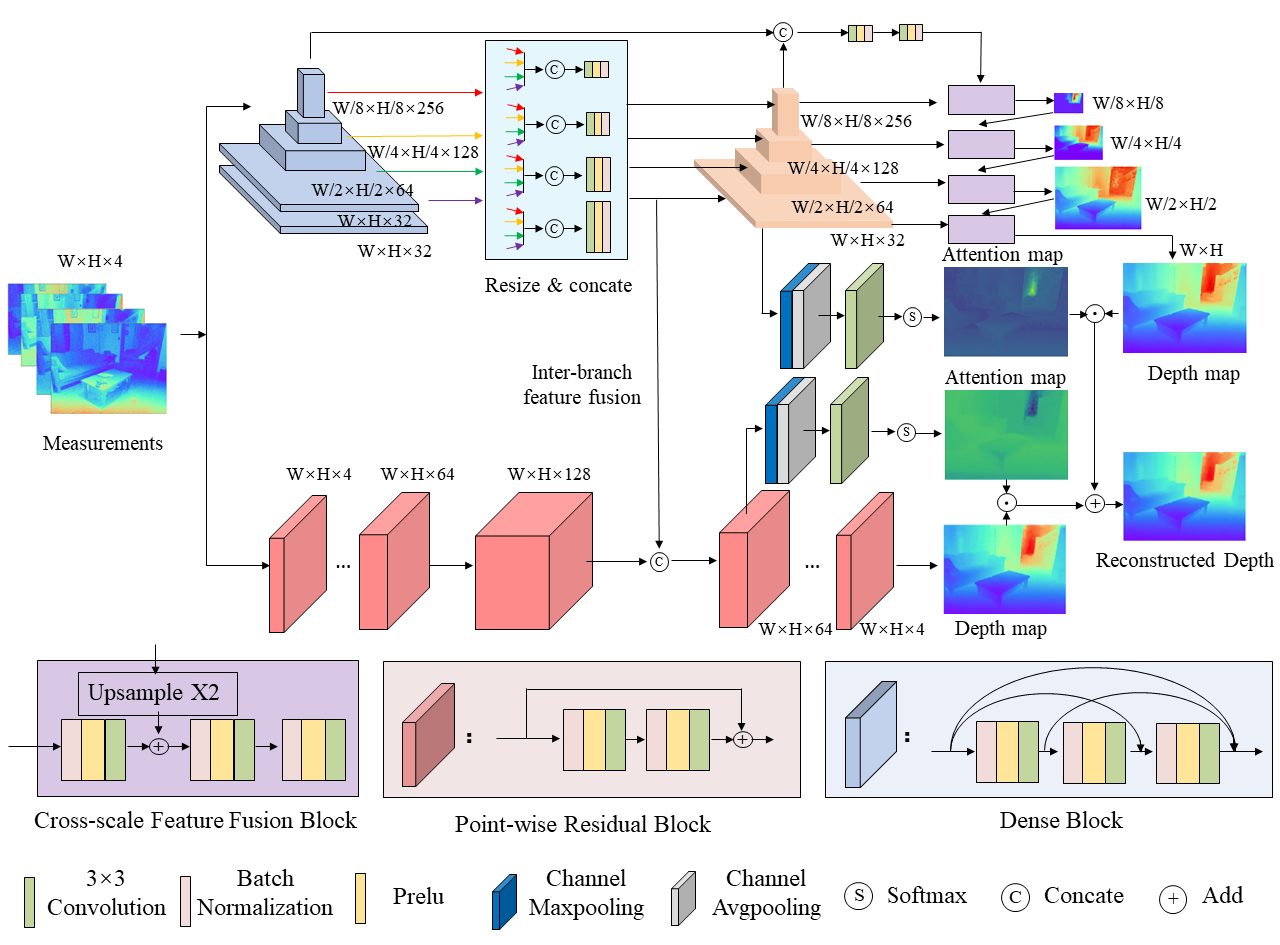

We propose an information-theory-guided framework that jointly optimizes the coding functions and the reconstruction neural network of iToF imaging in an end-to-end manner, supervised by the proposed discriminative Fisher loss. Specifically, we formulate the iToF imaging process as a differentiable physical imaging model with learnable coding functions. This model takes the physical implementation constraints into consideration, so the learned modulation and demodulation functions are physically implementable. The imaging model is followed by a dual-branch depth reconstruction neural network. We constrain the coding functions with the discriminative Fisher loss to maximize the depth information that the coding functions of iToF imaging can encode. We build a prototype iToF imaging system and implement noise-tolerant iToF imaging with the optimized coding functions and reconstruction network. Finally, we verify the state-of-the-art performance of the proposed iToF imaging method on both simulated and real captured data.
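The paragraph above does not spell out the imaging model or the loss. The sketch below illustrates the general idea under stated assumptions only; it is not the authors' implementation. Each pixel's expected measurement is modeled as the correlation of a periodic modulation waveform with each demodulation waveform. The modulation is delayed by the round trip 2z/c and attenuated by an assumed inverse-square falloff, and each gate also integrates an ambient term. Noise is approximated as Gaussian shot noise plus read noise. Under that model, the Fisher information I(z) measures how much the measurements reveal about depth z, and 1/I(z) is the Cramér-Rao lower bound on depth variance. All names (`mean_measurements`, `depth_fisher_information`, `crb_loss`, `waveform_from_logits`) and parameter values (`photons`, `ambient`, `read_noise`) are hypothetical. `crb_loss` is a generic Fisher-based objective, not the paper's discriminative Fisher loss. `waveform_from_logits` shows one assumed way of keeping coding functions non-negative and bounded.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def waveform_from_logits(logits):
    """Assumed implementability constraint: waveform values in [0, 1]."""
    return [sigmoid(x) for x in logits]


def correlate(mod, demod, shift):
    """Mean of demod[i] * mod[i - shift] over one sampled period.

    The fractional delay `shift` (in samples) is applied to the periodic
    modulation waveform by linear interpolation.
    """
    n = len(mod)
    total = 0.0
    for i in range(n):
        x = (i - shift) % n
        lo = math.floor(x)
        frac = x - lo
        j = lo % n  # float rounding can give x == n; wrap to index 0
        m = (1.0 - frac) * mod[j] + frac * mod[(j + 1) % n]
        total += demod[i] * m
    return total / n


def mean_measurements(depth, mod, demods, period, photons=1000.0,
                      albedo=1.0, ambient=0.1):
    """Expected photon counts of K correlation measurements at one pixel.

    Signal: modulation delayed by the round trip 2*depth/C and attenuated
    by an assumed inverse-square falloff albedo / depth**2.
    Background: constant ambient light integrated by each demodulation gate.
    """
    n = len(mod)
    shift = (2.0 * depth / C) / period * n  # round-trip delay in samples
    falloff = albedo / depth ** 2
    out = []
    for d in demods:
        signal = falloff * correlate(mod, d, shift)
        background = ambient * sum(d) / n
        out.append(photons * (signal + background))
    return out


def depth_fisher_information(depth, mod, demods, period, read_noise=5.0,
                             eps=1e-4, **model_kw):
    """Fisher information about depth for independent Gaussian measurements
    with mean mu_k(z) and variance v_k(z) = mu_k(z) + read_noise**2:

        I(z) = sum_k mu_k'(z)**2 / v_k + v_k'(z)**2 / (2 * v_k**2)

    Derivatives are central finite differences with step eps (metres).
    """
    mu = mean_measurements(depth, mod, demods, period, **model_kw)
    up = mean_measurements(depth + eps, mod, demods, period, **model_kw)
    dn = mean_measurements(depth - eps, mod, demods, period, **model_kw)
    info = 0.0
    for m0, mp, mm in zip(mu, up, dn):
        var = m0 + read_noise ** 2
        dmu = (mp - mm) / (2.0 * eps)
        # v_k differs from mu_k by a constant, so v_k'(z) == mu_k'(z)
        info += dmu ** 2 / var + dmu ** 2 / (2.0 * var ** 2)
    return info


def crb_loss(depths, mod, demods, period, **kw):
    """Mean Cramer-Rao lower bound on depth variance over sample depths.

    A generic Fisher-based objective, not the paper's discriminative loss.
    """
    return sum(1.0 / depth_fisher_information(z, mod, demods, period, **kw)
               for z in depths) / len(depths)


# Usage: conventional 4-phase sinusoidal coding at 20 MHz (period 50 ns).
N, PERIOD = 64, 50e-9
mod = [0.5 + 0.5 * math.cos(2 * math.pi * i / N) for i in range(N)]
demods = [[0.5 + 0.5 * math.cos(2 * math.pi * i / N - k * math.pi / 2)
           for i in range(N)] for k in range(4)]
for z in (1.0, 2.0, 4.0):
    info = depth_fisher_information(z, mod, demods, PERIOD)
    print(f"depth {z} m: Fisher information {info:.3g}")
print("CRB loss:", crb_loss([1.0, 2.0, 4.0], mod, demods, PERIOD))
# A bounded modulation built from unconstrained (learnable-style) logits.
bounded = waveform_from_logits([4.0 * math.cos(2 * math.pi * i / N)
                                for i in range(N)])
print("CRB loss (sigmoid-parameterized):",
      crb_loss([1.0, 2.0, 4.0], bounded, demods, PERIOD))
```

Under these assumptions, the Fisher information drops with distance because of the inverse-square falloff and rises with the photon budget. This matches the abstract's motivation that long distances and strong ambient light degrade iToF depth. Finite differences keep the sketch dependency-free. An end-to-end setup would more plausibly write the same model in an autodiff framework, so that gradients of a Fisher-based loss reach the waveform parameters.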

Figure 1. Overview of the proposed Fisher-information-guided learned iToF imaging framework.

Figure 2. Dual-branch depth reconstruction network.

Experiments

Synthetic Assessment

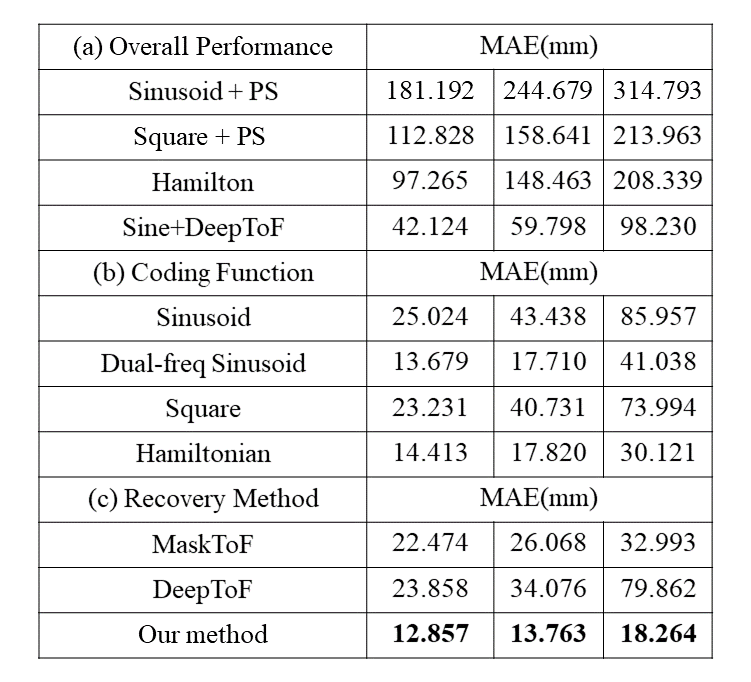

Table 1. Quantitative comparison of overall performance, coding functions, and reconstruction methods under three different noise settings (2nd to 4th columns).

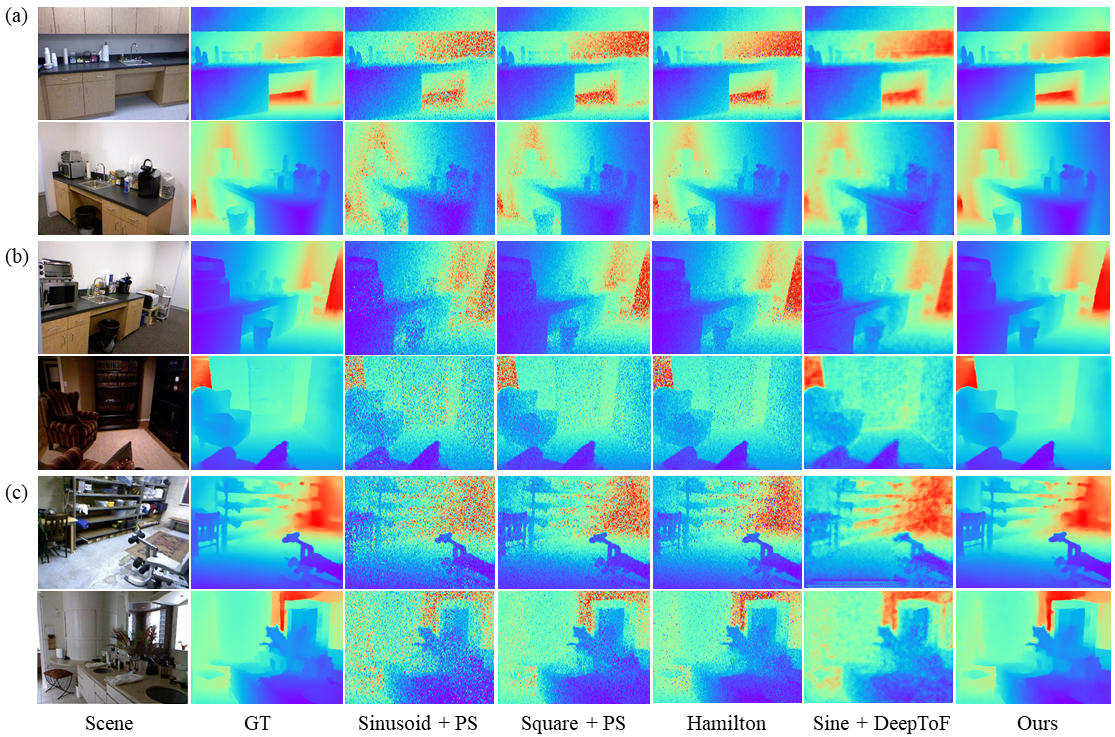

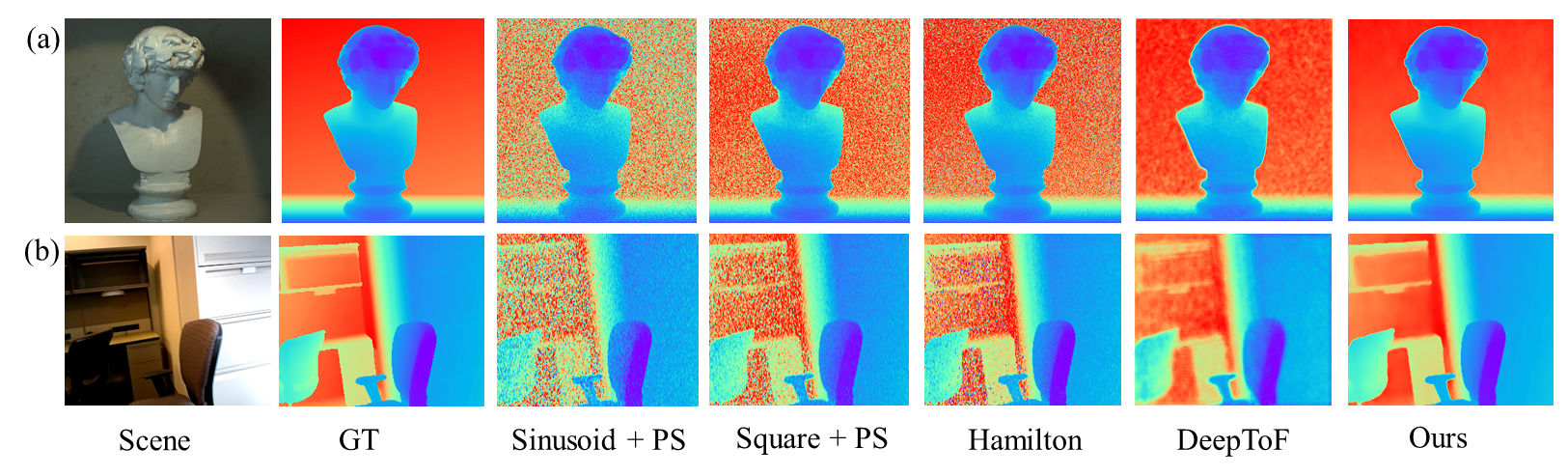

Figure 3. Overall comparisons with other iToF methods in three different noisy scenarios on the NYU-V2 dataset.

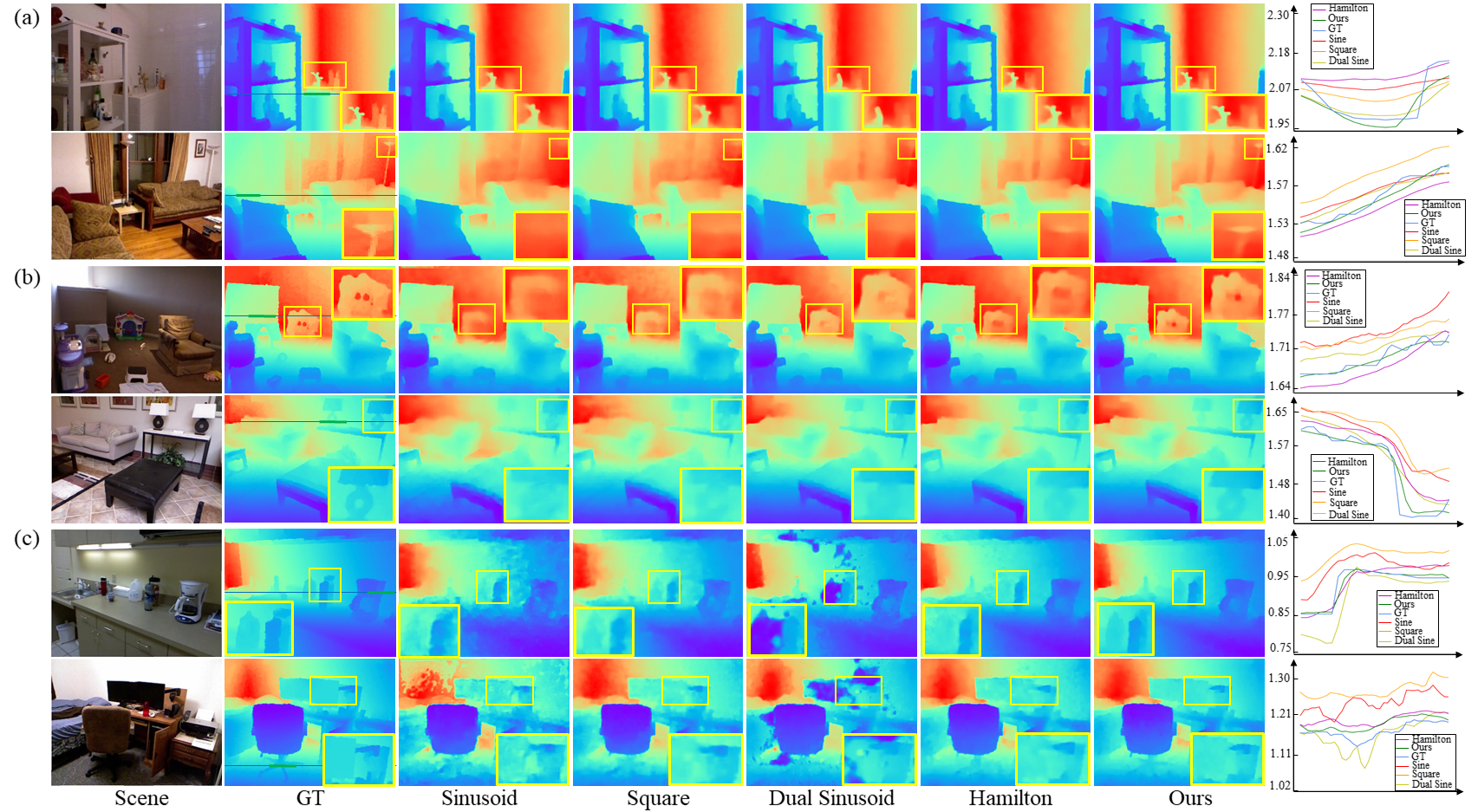

Figure 4. Depth reconstruction results on (a) the 4D Light Field dataset and (b) the SUN RGB-D dataset.

Figure 5. Comparisons with other coding functions in three different noisy scenarios.

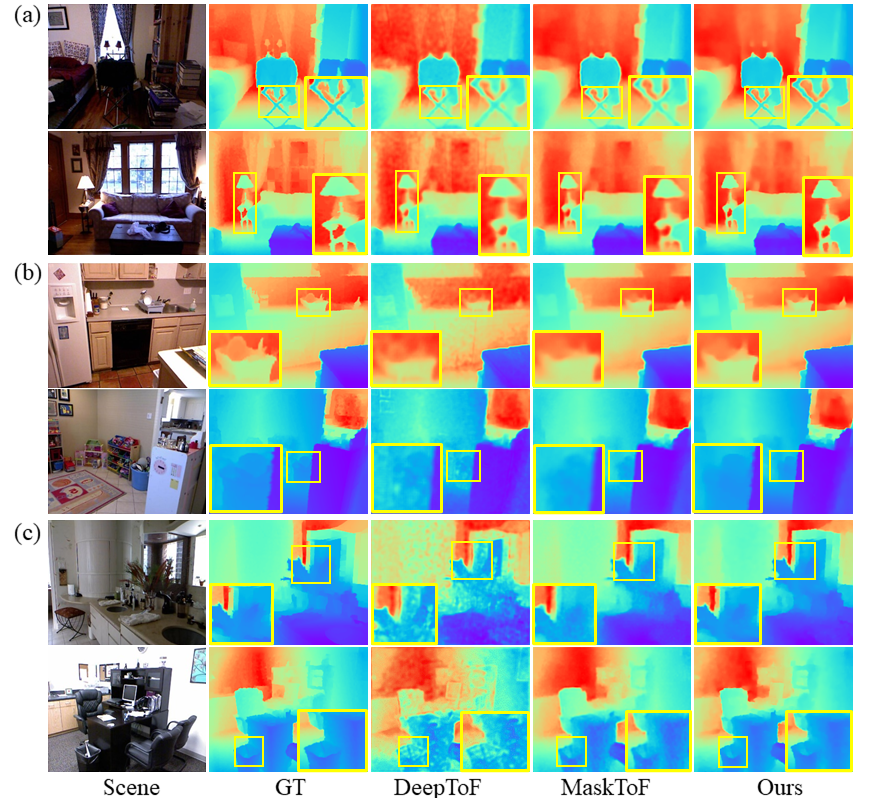

Figure 6. Comparisons with other depth reconstruction networks in three different noisy scenarios.

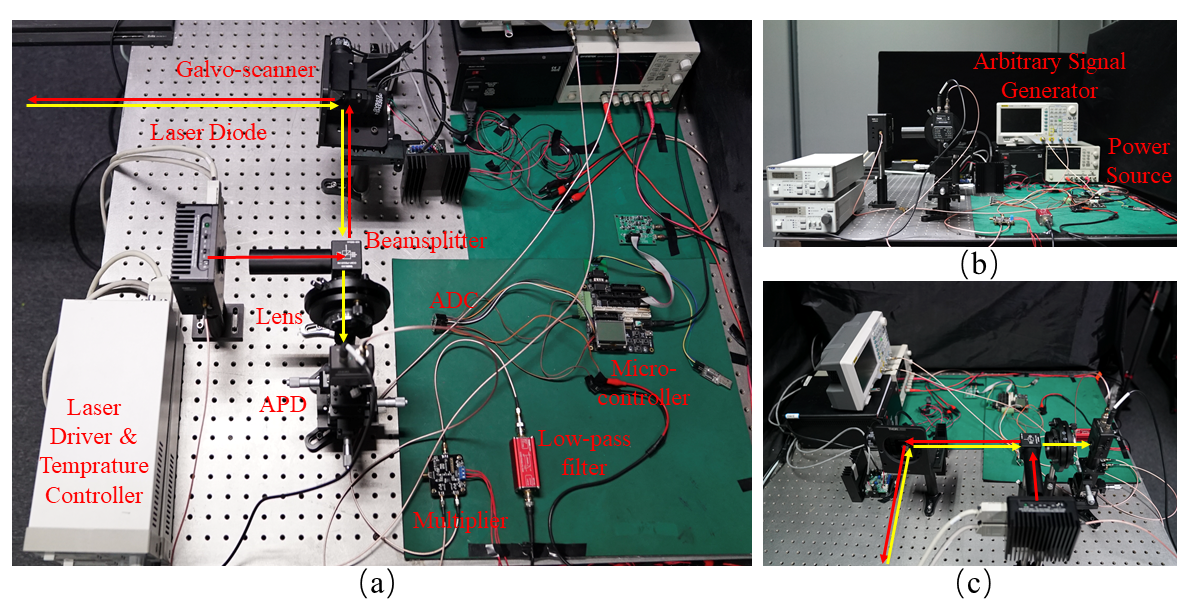

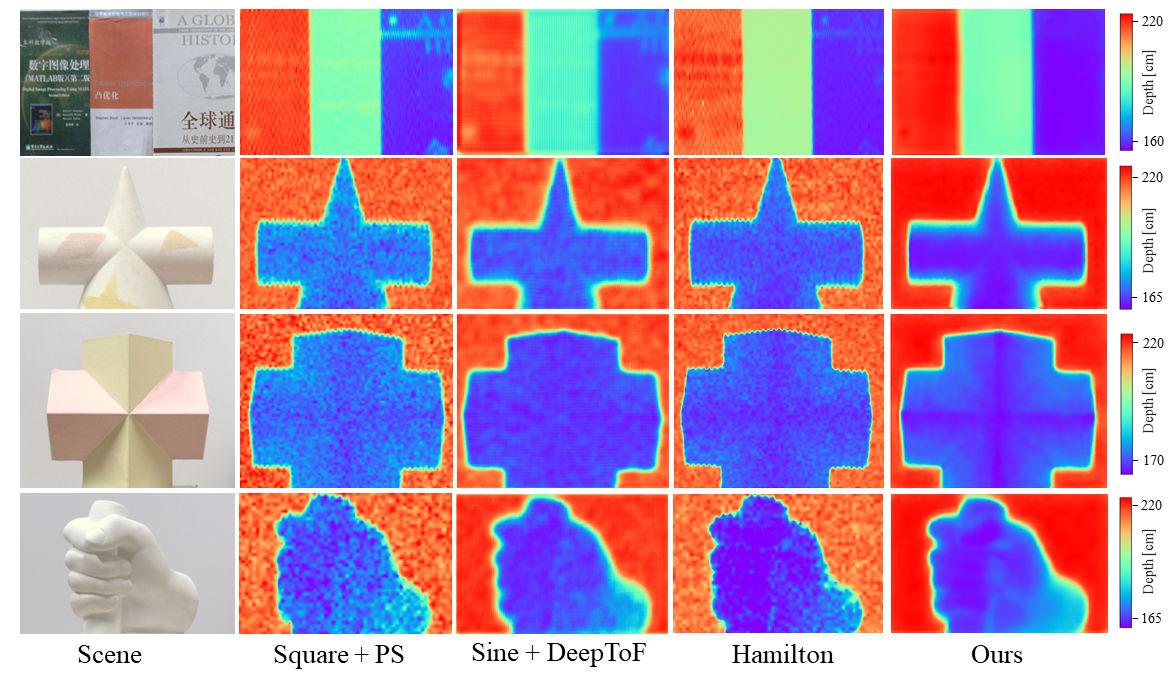

Physical Experiment

Figure 7. Experimental system: (a)-(c) top, front, and side views.

Figure 8. Performance comparisons in physical experiments.


BibTeX

@InProceedings{Li_2022_CVPR,
title = {Fisher Information Guidance for Learned Time-of-Flight Imaging},
author = {Li, Jiaqu and Yue, Tao and Zhao, Sijie and Hu, Xuemei},
booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
month = {June},
year = {2022},
pages = {16334-16343}
}